Bug Bounty Program Management: A Different Perspective

A non-technical overview of what to consider when running a Bug Bounty program

In this post I want to share my thoughts about how to run a bug bounty program from a non-technical perspective. There are many resources out there for a more technical approach, so I wanted to offer a different view. If you want to dive into the technical details as well, check out the resources at the bottom of the article.

1. Internal Considerations

There is little point in having a program if you cannot deal with the issues that you receive! The basis of a good program is having a good team.

1.1 Management

To run a program well, you also need to manage the internal team well. There are a few core focus areas that are especially important for achieving this.

The first is to have activities scheduled. Running a program is variable by nature: the flow of reports is always changing. As such, the engineers need to have tasks ready in case there is downtime.

Examples of such activities are:

- Testing internal systems

- Documenting previous cases

- Performing root cause analysis of received issues

The same is true for the opposite! You need to have a plan ready for how to handle a sudden flood of reports. I also want to point out that you should still have dedicated team members, even though there may be downtime. This is to ensure that lower impact reports also get processed in a timely manner. Not having a dedicated team can lead to long handling times for low impact issues due to other tasks having higher priority.

The second is to have clear role assignments. It is easy to have all engineers jump on exciting tasks with nobody picking up more trivial reports. Having a member be in charge of each report and following it up is critical to running an efficient team. It also helps make sure reports get resolved, and it avoids everyone focusing on a single issue. That member also knows how the exploit worked, so they can confirm that the issue is properly resolved.

Finally, having a set format for reporting issues is critical to communicating well with developers. The impact and time to resolution for the given issue need to be clearly stated. This reduces friction and results in everyone being on the same page.

All parties involved know what to expect!

This allows for a smooth escalation of both critical and minor issues. It also avoids confusion or placing blame, which can happen in high-pressure situations.

1.2 Organization

It is also important for the team to be organized well. It allows for rapid responses and sets the team up for success. As mentioned above, there will be both downtime and busy periods, so the team needs to be flexible.

The team should have tasks that do not have strict deadlines and can be paused while triaging reports. Penetration testing internal tools and services is a good example of this type of task. The risk is low since only employees have access. It usually requires local network access, which further reduces risk. It is an important aspect of defense-in-depth though, and should not be neglected. Another task that is well suited is doing risk assessments for low priority services.

The skillset and tools required to do well in bug bounty resemble those of an internal red team. The types of work are also compatible, since internal red teams rarely have urgent tasks. They also work with the CSIRT regularly, reducing friction when having to do incident response for a report.

What can be automated, should be automated!

Applying automation to the following actions is a must for smooth operations:

- Ticket creation

- Member assignment

- Ticket status updates
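As a rough sketch of what automating these three actions could look like, the snippet below uses a hypothetical in-memory `TicketQueue` as a stand-in for a real ticketing system. The class names, the round-robin assignment rule and the status strings are all assumptions made for illustration, not a description of any particular tool.

```python
from dataclasses import dataclass, field
from itertools import cycle


@dataclass
class Ticket:
    ticket_id: int
    title: str
    assignee: str
    status: str = "new"
    history: list = field(default_factory=list)  # previous statuses, oldest first


class TicketQueue:
    """Hypothetical in-memory stand-in for a real ticketing system."""

    def __init__(self, members):
        # Round-robin rotation so that every report gets an owner,
        # including the less exciting ones.
        self._rotation = cycle(members)
        self._next_id = 1
        self.tickets = {}

    def create(self, title):
        # Ticket creation and member assignment happen in one step.
        ticket = Ticket(self._next_id, title, next(self._rotation))
        self.tickets[ticket.ticket_id] = ticket
        self._next_id += 1
        return ticket

    def update_status(self, ticket_id, status):
        # Record the previous status before overwriting it.
        ticket = self.tickets[ticket_id]
        ticket.history.append(ticket.status)
        ticket.status = status
        return ticket
```

In a real setup the `create` call would typically be triggered by the incoming report itself, so nobody has to copy details between systems by hand.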

Automation also reduces the chance of human error occurring. The more human interactions, the higher the chance of a mistake.

Therefore human input should be kept to a minimum, especially for processes dealing with sensitive data.

An example flow is as follows:

Receive human input -> Double check (verify / reject only) -> Output

A perfect illustration of where to apply this is for bounty payouts. The application can be as follows:

Team member A inputs bounty -> Team member B confirms sum -> Bounty is paid
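The payout flow above could be modelled roughly as follows. This is a minimal sketch: the `BountyPayout` class, its field names and the status values are hypothetical, and the actual payment step is reduced to a returned string.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class BountyPayout:
    report_id: str
    amount: int  # assumed to be in the smallest currency unit
    entered_by: str
    confirmed_by: Optional[str] = None
    status: str = "pending"

    def review(self, reviewer: str, approve: bool) -> None:
        # The reviewer can only verify or reject, never edit the amount.
        if self.status != "pending":
            raise ValueError("payout has already been reviewed")
        if reviewer == self.entered_by:
            raise PermissionError("reviewer must differ from the member who entered the bounty")
        self.confirmed_by = reviewer
        self.status = "approved" if approve else "rejected"

    def pay(self) -> str:
        # Payment is only possible after a second member has approved.
        if self.status != "approved":
            raise RuntimeError("payout has not been approved")
        self.status = "paid"
        return f"paid {self.amount} for {self.report_id}"
```

The second member has no way to change the sum, only to approve or reject it, which keeps the number of places where a mistake can be introduced to one.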

Manual work is the killer of motivation, efficiency and robustness. You should strive towards having a system where the team can focus on the following tasks:

- Triaging the report

- Verifying the fix

- Communicating with the reporter

- Deciding the bounty

Tasks that are unrelated to engineering should be kept to a minimum. Here are a few examples:

- Identifying which department is in charge of a service

- Identifying which part of the code is vulnerable

- Preparing the paperwork for paying out bounties

These tasks are menial and you can easily automate them at scale.
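To illustrate the first of these, looking up the responsible department can be as simple as a table keyed by hostname. The sketch below assumes a hypothetical `SERVICE_OWNERS` mapping with made-up hostnames and department names; in practice the data would more likely come from a service catalog.

```python
from urllib.parse import urlparse

# Hypothetical ownership table, for illustration only.
SERVICE_OWNERS = {
    "shop.example.com": "E-commerce",
    "pay.example.com": "Payments",
}


def owning_department(reported_url: str, default: str = "Security team") -> str:
    """Return the department that owns the host in the reported URL."""
    host = urlparse(reported_url).hostname or ""
    # Unknown hosts fall back to a default owner instead of failing.
    return SERVICE_OWNERS.get(host, default)
```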

If you are considering starting a program, you should set up this automation before receiving your first report!

2. External interactions

What is shared externally defines how your program is perceived. It is hard to decide on bounty ranges and scope. Yet it pales in comparison to communicating well with reporters.

2.1 Reporting

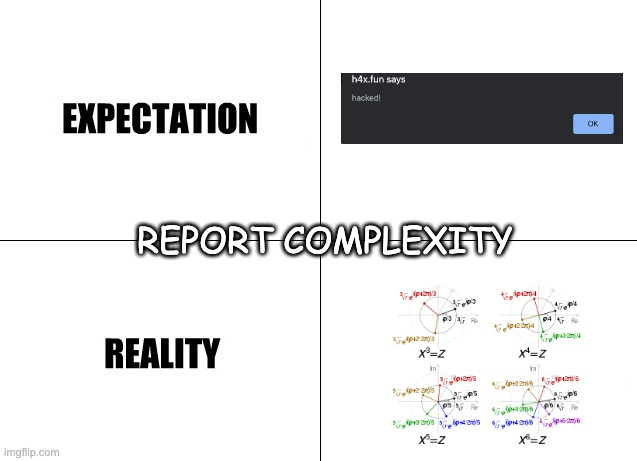

The communication naturally starts when you get a report. Being pre-emptive and telling the reporters what you want to know and how you want it to look is extremely helpful.

Knowing what to expect from the content of a report reduces the frustration for the team receiving the report. All triagers have received a report where the content is all mixed up, making it hard to understand the issue. A good format also makes it easier to understand the content at a glance. This sets you up to succeed. The team is happy and the chance of the report being resolved fast increases.

A good template can be found here

I prefer this format:

- Overview

- Summary

- Impact

- Details

- PoC

- Mitigation / Prevention

- Testing Environment

In the Testing Environment section you should add:

Special headers, User Agent, IP address, App version, etc.

This makes it easier for the triaging team to identify your testing when checking logs.
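If reports arrive as plain text, a program could also check automatically whether the expected sections are present before a triager looks at them. The following is only a sketch: it assumes each section name appears on a line of its own (optionally prefixed with `#` characters), and the function name `missing_sections` is made up for this example.

```python
from typing import List

# Section names taken from the format listed above.
REQUIRED_SECTIONS = [
    "Overview",
    "Summary",
    "Impact",
    "Details",
    "PoC",
    "Mitigation / Prevention",
    "Testing Environment",
]


def missing_sections(report_text: str) -> List[str]:
    """Return the template sections that do not appear as a line of their own."""
    # Normalise each line: drop surrounding whitespace and leading '#' markers.
    lines = {
        line.strip().lstrip("#").strip().lower()
        for line in report_text.splitlines()
    }
    return [name for name in REQUIRED_SECTIONS if name.lower() not in lines]
```

An automated reply listing the missing sections gives the reporter a chance to fix the report before anyone has spent time on it.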

2.2 Communication

First of all, respond!

Being ghosted sucks. Keeping hackers up to date is important, but time consuming. Rather than trying to treat the conversation as a casual chat, set expectations and follow through on the promises you make.

Tell them when you will get back to them. If they don't hear back by then, they will remind you. If reporters nag about the status of reports, you are likely not setting expectations properly. It can be annoying, but understand it is likely due to giving vague replies and not following up properly.

Furthermore, setting expectations and not following through is the same as not replying. If you say you will get back to the reporter this week, you have to reply this week! You don't have to resolve the issue, but at least give a simple update. Even if you have no new information, let the reporters know they haven't been forgotten.

There are also 3 core principles I like to use when communicating with reporters (and triagers):

- Be kind

- Be polite

- Be firm

Give people the benefit of the doubt! If you are angry or frustrated, let someone else handle the report or do it once you've calmed down. Treat people with respect.

The second point I regard as common courtesy. The reporters / triagers are not your friends, and even if they are, you are in a professional setting. Nobody expects a formal letter, but it is usually not appropriate to use emojis, 1337 h4x0r terms, etc. either.

Make your report easy to understand!

For the third point, make it clear what is and isn't accepted. You are already giving reporters the benefit of the doubt. If you have explained why the report is not considered valid twice already, it is OK to say:

Unless you can show further impact via PoC, we now consider this report closed.

It clearly conveys what is expected and allows everyone involved to spend their time efficiently.

It is hard to find the correct balance between enabling discussions and being firm. I usually live by three times and you're out! What I mean by that is, I will discuss the same issue at most 3 times:

- The first time I will explain in depth.

- The second time I will clarify and explain in other words.

- The third time I will clarify and end the discussion.

It gives a nice balance between providing proper context while avoiding long arguments. As with all things, exceptions apply!

Another important aspect is encouraging members to give guidance to reporters submitting low quality reports. Someday, the reporter might come back after having learned more and find a critical bug.

Don't just reply Out Of Scope, No Impact or Invalid!

Describe why you consider it as such, so reporters learn how to contribute to your program. By explaining your evaluation, you can help them towards providing valuable reports. If they do not agree with your assessment, they can focus on programs that better align with their views, leading to less noise for your team.

This helps avoid the scenario where reporters keep submitting reports of no value to your program, wasting everyone's time.

2.3 Cooperation

This is both the easiest and the hardest to achieve. Try to remember and understand that both sides hopefully have the same goal - to fix the issue! Keeping this in mind when interacting is important.

Try to think of what it would be like if you were on the other side, regardless of whether you are the reporter or the triager.

It is frustrating to try to explain an issue to someone who does not understand. Especially after repeated attempts to do so. You are just trying to help!

On the other hand it is also frustrating to try to explain that your organization does not consider an issue valid. There is no point in arguing your report is valid if the triage team understands the issue and tells you otherwise. Typical examples are Google Maps API keys and Open Redirects with no further security impact.

It is important - from both sides - to remember that reporters are sharing potential issues with an organization. The organization can then decide if they consider it an issue or not.

Acting in good faith means that the organization should seek to reward those who contribute, but they are not obliged to do so. You volunteered to look for bugs in their service!

As reporters, it is important that we remember that we are not entitled to a reward! As triagers, it is important to keep in mind that a person spent their time trying to assist your company (regardless of the quality of the report).

Instead of thinking of it as us and them, reporters and triagers, we are one team!

We work together towards providing safer services!

In closing I would like to clarify that this is the ideal image that I think programs should aim for. It is not realistic to expect perfection in every situation. Instead we should all look for continued improvement and learning.

If you got this far, thanks for reading! As thanks, here's a puppy picture!

Cheers!

As promised, here are the resources that discuss bug bounties from a technical perspective:

- Coordinated Vulnerability Disclosure: You’ve Come a Long Way, Baby - RSA - Katie Moussouris & Chris Wysopal

- The Bug Bounty Guide - EdOverflow

- Reported to Resolved: Bug Bounty Program Manager - PascalSec interviewed by InsiderPhd

- Setting up bug bounties for success - lcamtuf

- Sharing the Philosophy Behind Shopify's Bug Bounty - Shopify